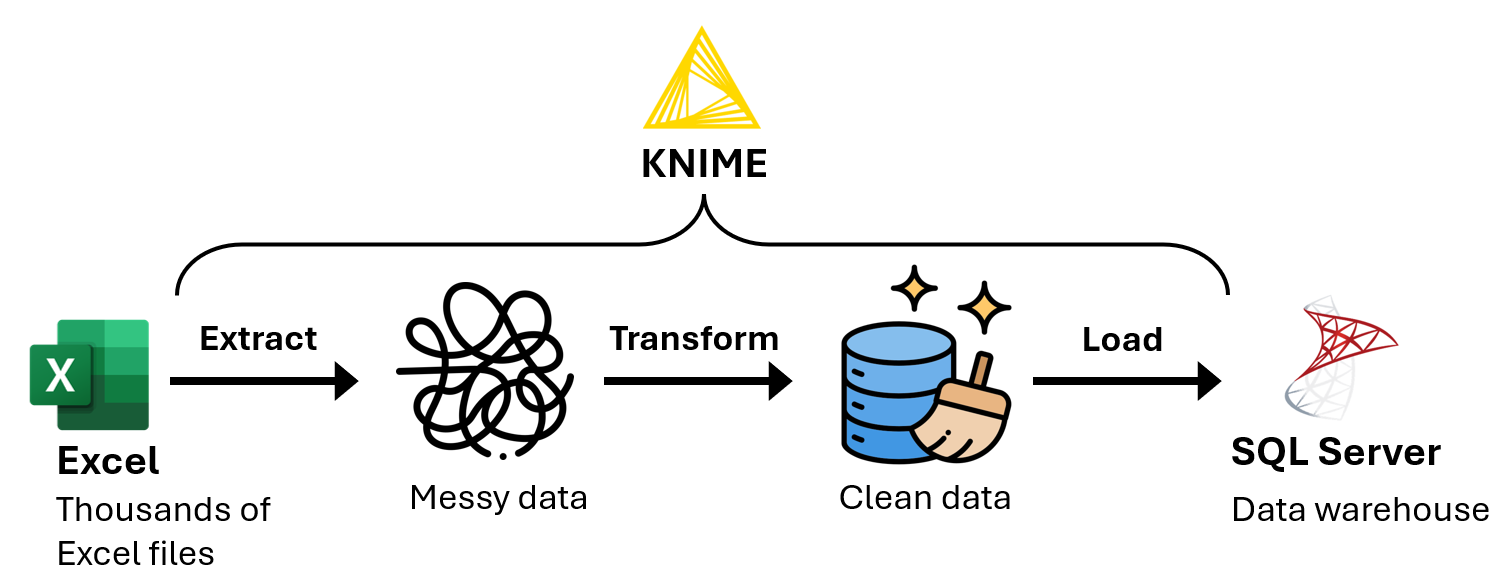

Automating Tilray's weekly ETL using KNIME and SQL Server

Summary

Problem: Every week, the analytics team used Power Query to transform and import new sales and inventory data (stored in over 30 Excel files) into their Power BI reports. After a few years, the Power BI report data refreshes became very slow, because Power Query was transforming data from thousands of Excel files during each data refresh.

Solution: I used KNIME to develop an ETL program to extract the data from the Excel files, transform the data, then load the transformed data into SQL Server. This allowed the team to import the data from SQL Server instead of from thousands of Excel files.

Results: The Power BI report data refreshes became 80% faster.

KNIME

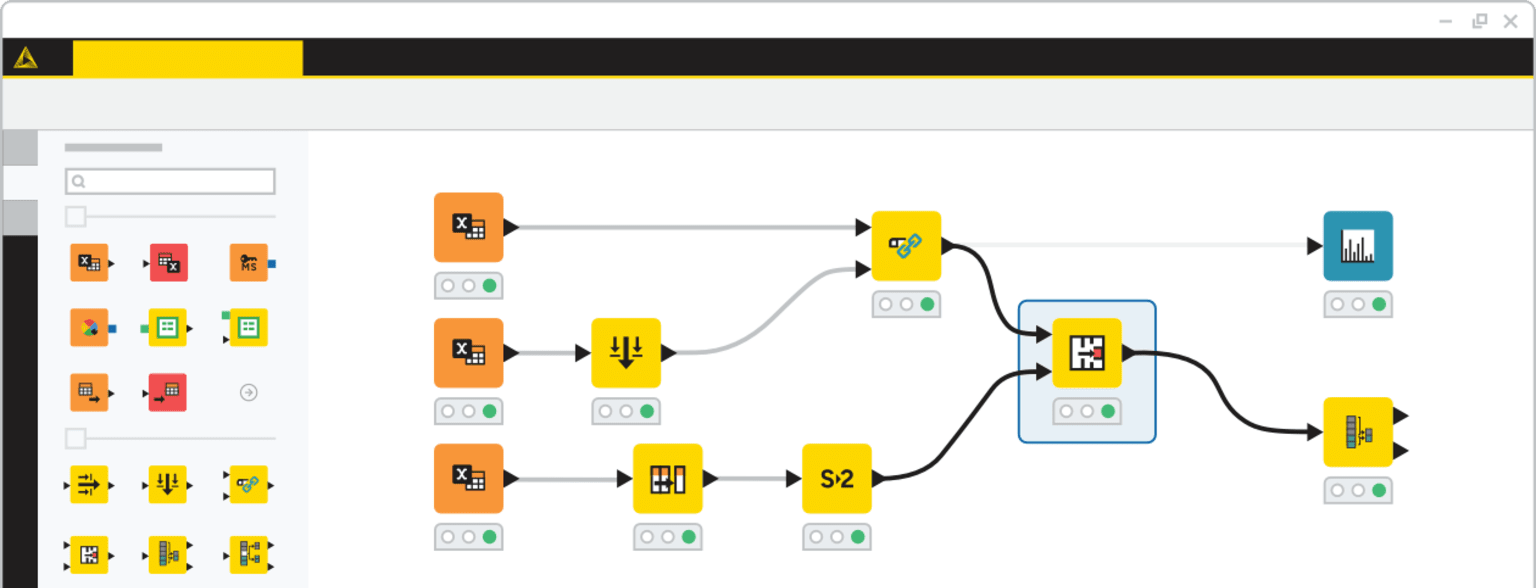

KNIME is a low-code data processing application where you can chain “nodes” to create programs. A node is like a function in a programming language.

Screenshot of KNIME.

Definitions

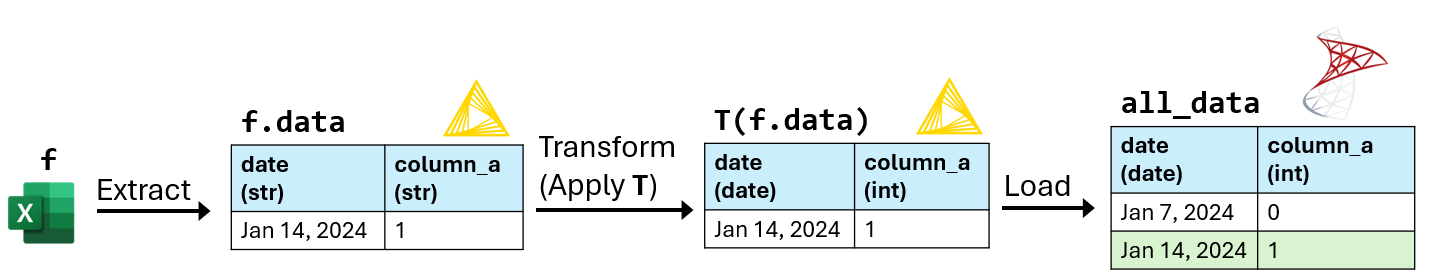

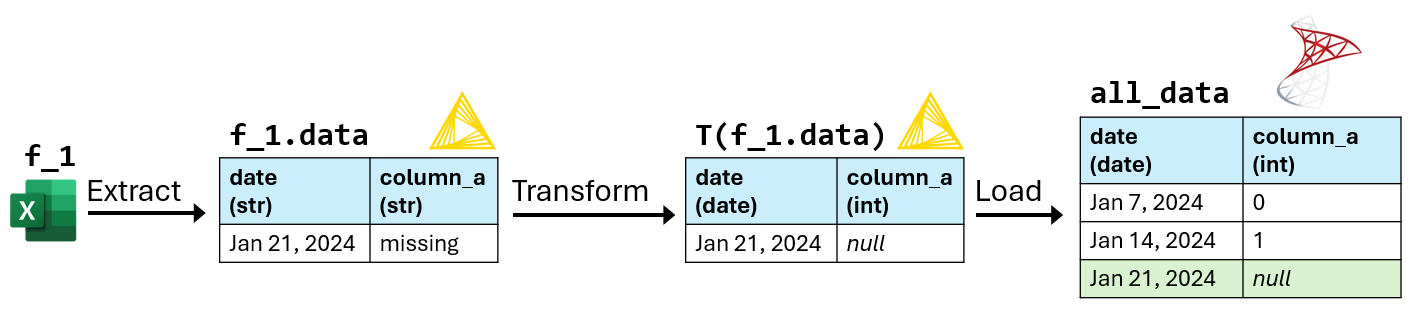

f- An Excel file containing sales and inventory data for one week.

f.data- The data table extracted from

f. All columns inf.dataare the string data type. T-

A sequence of data transformations. These include:

- Transforming column contents using regular expressions.

- Converting column data types.

T(f.data)- The resulting table after applying

Ttof.data. all_data- A database table that will store

T(f.data)for every Excel filef. - match

- A table

Amatches a tableBifAandBhave the same column names and data types.T(f.data)can only be loaded intoall_dataifT(f.data)matchesall_data. all_file_names- A set of all the Excel file names.

loaded_file_names- A set of the Excel file names

f.namesuch thatT(f.data)has been loaded intoall_data.

Pseudocode of the ETL program (similar to Python)

Algorithm ETL_program(file_names): Input: file_names: A set of Excel file names. Output: A dictionary of (file name, error message) items. not_loaded_file_names = file_names − loaded_file_names. file_name_to_error_message = dict(). for each Excel file name f.name in not_loaded_file_names: f.data = Extract the data table from f. try: T(f.data) = Apply each transformation in T to f.data. Load T(f.data) into all_data. except Exception as error: file_name_to_error_message[f.name] = str(error). else: Add f.name to loaded_file_names. return file_name_to_error_message.

After executing file_name_to_error_message = ETL_program(all_file_names), I execute the following steps manually:

for f.name, error_message in file_name_to_error_message.items(): Use error_message to update the data transformations in T. Execute ETL_program(set(f.name)).

Example execution of the ETL program

T:-

- Convert

dateto the date data type. - Convert

column_ato the integer data type.

- Convert

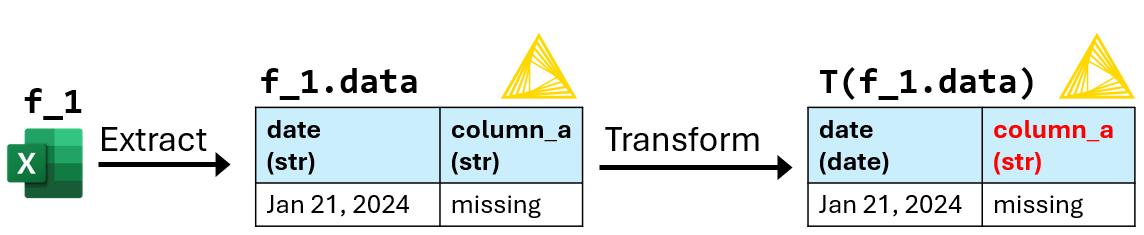

1. Execute ETL_program(set(f_1.name)):

T(f_1.data) can't be loaded into all_data because "missing" in column_a can't be converted to an integer.

2. Update T:

T:-

- Convert

dateto the date data type. - In

column_a, replace all cells matching the regular expression“^missing$”withnull. - Convert

column_ato the integer data type.

- Convert

3. Execute ETL_program(set(f_1.name)):

After updating T, T(f_1.data) is loaded into all_data.